Neurosearch for Control-Oriented System Identification Applied to Buildings

Team

Aaron Tuor, leader; Jan Drgona, Elliott Skomski, Vikas Chandan

Problem

Energy-efficient buildings are one of the top priorities for sustainably meeting global energy demand and reducing carbon dioxide emissions. Advanced control strategies for buildings are a potential solution, with projected energy savings of up to 28 percent. Current approaches are based on constrained optimal control methods and depend heavily on mathematical models of the building dynamics. Beyond the high state dimension of building dynamics, occupants and external drivers, such as weather and electricity fluctuations, interact simultaneously and to varying degrees, making wide-scale modeling from first principles prohibitively expensive in time and expertise.

Here we present reliable data-driven methods that are cost-effective in terms of computational demands, data collection, and domain expertise. These methods have the potential to transform energy-efficient building operations by enabling wide-scale acquisition of accurate, scalable, building-specific prediction models. In this project we learn physics-constrained recurrent neural models of building thermal dynamics that generalize in a physically coherent way from small datasets. We further demonstrate the method on three other nonlinear systems, using a genetic algorithm to search the space of physics-informed, block-structured neural state space models.

Approach

Starting from a commercial building energy dataset, we perform control-oriented, data-driven modeling with a family of neural state space models whose strong inductive biases suit building dynamics. We use a neural Hammerstein model with an input nonlinearity, linear state maps parametrized to constrain their eigenvalues so that they model the dissipative thermal behavior of the building, and penalty-method constraints that keep the model's predictions within physically realizable ranges. To demonstrate the approach's broader utility, we devise an asynchronous genetic algorithm that efficiently searches our general design space of physics-informed neural state space models, and we use it to model three other nonlinear systems: an aerodynamic body, a two-tank interacting system, and a continuous stirred tank reactor.
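The model structure described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the project's implementation: the softmax-times-scale ("Perron-Frobenius-style") parametrization of the state matrix, the single tanh input layer, the eigenvalue bounds of 0.8 and 1.0, and every function name below are hypothetical choices. The idea it shows is that a nonnegative matrix whose row sums all lie in [λ_min, λ_max] has its dominant eigenvalue in that same interval, so bounding the row sums bounds how fast the linear dynamics can grow or decay.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matvec(M, v):
    # Dense matrix-vector product on nested lists.
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def constrained_state_matrix(raw, scale_raw, lam_min=0.8, lam_max=1.0):
    # Hypothetical parametrization: each row is a softmax of free parameters
    # (nonnegative, summing to 1) times a scale squashed into [lam_min, lam_max].
    # For a nonnegative matrix the dominant (Perron) eigenvalue lies between the
    # smallest and largest row sums, so the spectral radius stays in that range.
    A = []
    for row, s in zip(raw, scale_raw):
        scale = lam_max - (lam_max - lam_min) * sigmoid(s)
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]  # shift for numerical stability
        total = sum(exps)
        A.append([scale * e / total for e in exps])
    return A


def input_nonlinearity(u, W, b):
    # Hammerstein block: a static nonlinearity on the inputs, applied before the
    # linear dynamics (a single tanh layer here, purely illustrative).
    return [math.tanh(z + bi) for z, bi in zip(matvec(W, u), b)]


def hammerstein_step(x, u, A, B, W, b):
    # One state update: x_{k+1} = A x_k + B f_u(u_k).
    fu = input_nonlinearity(u, W, b)
    return [ax + bf for ax, bf in zip(matvec(A, x), matvec(B, fu))]


def bound_penalty(y, y_min, y_max, weight=1.0):
    # Penalty method: squared violation of y_min <= y <= y_max, added to the
    # training loss so predictions are pushed back into a realizable range.
    return weight * sum(max(0.0, yi - y_max) ** 2 + max(0.0, y_min - yi) ** 2
                        for yi in y)
```

For example, `bound_penalty([19.0, 22.0, 27.0], 20.0, 26.0)` returns 2.0: the first and last values each violate a bound by 1, while the middle one is feasible and contributes nothing. Because the constraint enters only as a loss term, it nudges the trained model toward physical ranges rather than enforcing them exactly.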

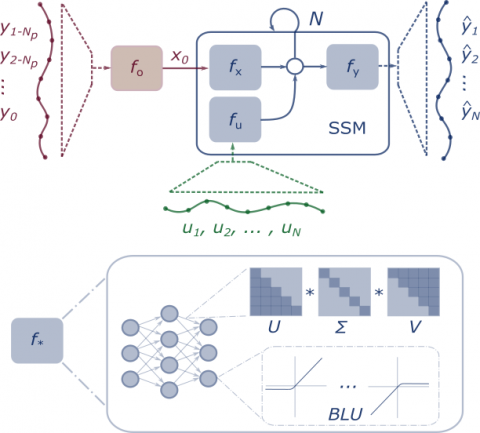

Block nonlinear neural state space model architecture for partially observable systems with Np-step past and N-step prediction horizons.
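The Np-step past and N-step prediction horizons imply that the training data are cut into paired windows. The sketch below assumes, as is common for partially observable systems, that the past window feeds a state estimator producing the initial state, after which the model is rolled forward over the prediction window. The function name `make_windows` and the default of overlapping, stride-1 windows are hypothetical choices.

```python
def make_windows(series, n_past, n_pred, stride=1):
    # Cut a time-ordered sequence into (past, future) pairs: n_past samples for
    # estimating the initial state, followed by the n_pred samples to predict.
    windows = []
    last_start = len(series) - n_past - n_pred
    for start in range(0, last_start + 1, stride):
        past = series[start:start + n_past]
        future = series[start + n_past:start + n_past + n_pred]
        windows.append((past, future))
    return windows
```

For instance, `make_windows(list(range(6)), n_past=2, n_pred=3)` returns `[([0, 1], [2, 3, 4]), ([1, 2], [3, 4, 5])]`. Setting `stride` to `n_past + n_pred` yields non-overlapping windows instead, and a series shorter than one full window yields an empty list.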

Results

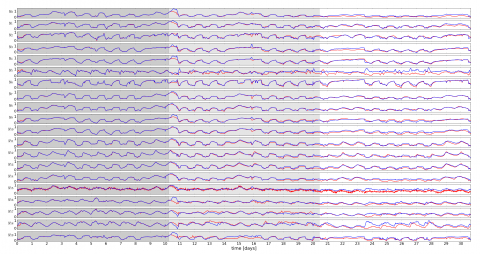

For all four systems, we assessed the open-loop simulation performance of the best-performing physics-constrained, structured recurrent model. For the building, a model trained on only 10 days of measurement data and simulated over the 10 following days achieved an open-loop mean squared error corresponding to roughly 0.45 K of error per output, a 50 percent reduction in error relative to the previous state of the art for this building system.
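Open-loop simulation means the model is initialized once and then driven only by the recorded inputs for the whole evaluation period, with no measurements fed back to correct its state. The sketch below shows such a rollout and the error metric. The function names are hypothetical, and it assumes the 0.45 K figure is the square root of the mean squared error, which puts it in kelvin rather than K²; under that reading, 0.45 K corresponds to an MSE of 0.45² ≈ 0.20 K².

```python
import math


def open_loop_rollout(step, x0, inputs, output):
    # Simulate without feedback: the state is propagated only through the
    # model's own predictions, never reset from measured data.
    x, ys = x0, []
    for u in inputs:
        ys.append(output(x))
        x = step(x, u)
    return ys


def mse_and_rmse(predicted, measured):
    # MSE averaged over all time steps and outputs; its square root is in the
    # same unit as the outputs (kelvin for zone temperatures).
    errors = [(p - m) ** 2
              for y_p, y_m in zip(predicted, measured)
              for p, m in zip(y_p, y_m)]
    mse = sum(errors) / len(errors)
    return mse, math.sqrt(mse)
```

As a check of the arithmetic, `mse_and_rmse([[0.45]], [[0.0]])` gives an MSE of about 0.2025 and a root-mean-square error of about 0.45. Because errors compound over an open-loop rollout, this metric is a stricter test than one-step-ahead prediction error.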

Using an asynchronous genetic algorithm to discover neural state space models for the other three nonlinear systems, we found that the physics-constrained models outperformed unconstrained black-box recurrent neural networks in open-loop simulation. These results suggest that our approach holds great promise for a wider range of high-value, difficult-to-model systems.
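An asynchronous genetic algorithm differs from a generational one in that a new candidate is bred and dispatched as soon as any worker finishes an evaluation, so workers never sit idle waiting for a full generation to complete. The following is a minimal sketch of that idea, not the project's search code: the design space, the dictionary genome encoding, the `toy_loss` function standing in for "train the candidate model and return its validation error", and all operator settings (tournament size, mutation rate, population size, budget) are hypothetical.

```python
import random
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait

# Hypothetical design space of block-structured model choices.
DESIGN_SPACE = {
    "state_map": ["linear", "perron_frobenius", "mlp"],
    "input_map": ["linear", "mlp"],
    "n_layers": [1, 2, 3],
    "hidden": [16, 32, 64],
}


def random_genome(rng):
    return {k: rng.choice(v) for k, v in DESIGN_SPACE.items()}


def crossover(a, b, rng):
    # Uniform crossover: each gene comes from one parent at random.
    return {k: (a if rng.random() < 0.5 else b)[k] for k in DESIGN_SPACE}


def mutate(genome, rng, rate=0.2):
    # Resample each gene from the design space with probability `rate`.
    return {k: rng.choice(DESIGN_SPACE[k]) if rng.random() < rate else v
            for k, v in genome.items()}


def tournament(population, rng, size=3):
    # Pick the lowest-loss genome among a few random (loss, genome) entries.
    contenders = rng.sample(population, min(size, len(population)))
    return min(contenders, key=lambda entry: entry[0])[1]


def scored(evaluate, genome):
    return evaluate(genome), genome


def async_ga(evaluate, n_workers=4, pop_size=8, budget=40, seed=0):
    rng = random.Random(seed)  # used only on the main thread
    population = []  # steady-state pool of (loss, genome), best first
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        pending = {pool.submit(scored, evaluate, random_genome(rng))
                   for _ in range(min(n_workers, budget))}
        submitted = len(pending)
        while pending:
            # Resume as soon as any evaluation finishes (asynchronous).
            done, pending = wait(pending, return_when=FIRST_COMPLETED)
            for future in done:
                population.append(future.result())
                population.sort(key=lambda entry: entry[0])
                del population[pop_size:]  # drop the worst entries
                if submitted < budget:
                    if len(population) < 2:
                        child = random_genome(rng)
                    else:
                        child = mutate(crossover(tournament(population, rng),
                                                 tournament(population, rng),
                                                 rng), rng)
                    pending.add(pool.submit(scored, evaluate, child))
                    submitted += 1
    return population[0]


def toy_loss(genome):
    # Hypothetical stand-in for training a candidate and scoring it.
    loss = {"linear": 1.0, "perron_frobenius": 0.2, "mlp": 0.6}[genome["state_map"]]
    loss += 0.1 * abs(genome["n_layers"] - 2)
    loss += 0.0 if genome["input_map"] == "mlp" else 0.3
    return loss


best_loss, best_genome = async_ga(toy_loss)
```

Because completion order depends on thread timing, runs are not exactly reproducible even with a fixed seed; in a real search each evaluation would be a full model training run, which is where dispatching work without generation barriers pays off.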

Code, Data, and Related Links

In progress.