Reinforcement Learning for Grid Control

Team

Xinya Li, leader; Yunzhi Huang, Xiaoyuan Fan, Malachi Schram, Qiuhua Huang

Problem

In partially observable environments, where control tasks span long time horizons and involve high-dimensional state and action spaces, deep reinforcement learning (DRL) agents have been shown to achieve promising results. In energy system domains, several core DRL challenges remain to be explored: scalability, learning from limited samples, and high-dimensional continuous state and action spaces. In this task, we focus on the scalability challenge, in coordination with ongoing projects investigating sample efficiency and state space requirements for controlling energy transmission on the electric grid.

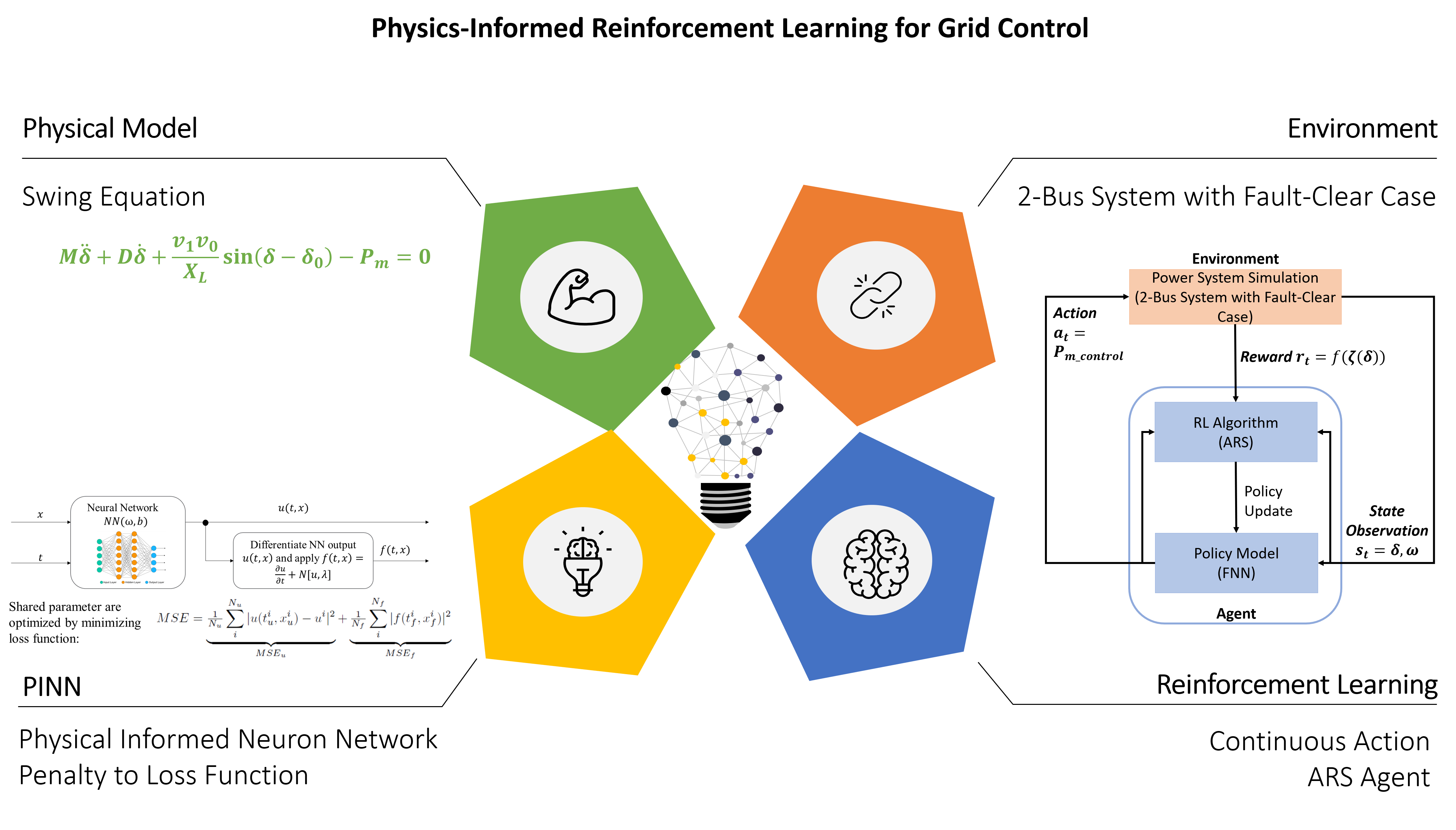

Approach

The dataset for this study is generated specifically for reinforcement learning for grid control. To address scalability and sampling efficiency, our team will apply an augmented random search (ARS) agent to a generator bus of a two-bus system environment hosting the swing-equation simulation tool. The selected generator’s rotor angle and speed will serve as the observation states, which are the inputs to the DRL agent’s neural network. Physics-based information from the formula for the generator’s normal operating state will be provided to the loss function of the reinforcement learning (RL) agent’s neural network during training. Fault conditions will also be considered.
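To make the setup above concrete, the following is a minimal sketch of a basic ARS loop driving a swing-equation simulation. It is not the project's code. It assumes a single generator connected to an infinite bus (a common simplification of a two-bus system), a linear policy in place of the neural network, and a hypothetical quadratic reward. All parameter values and the names `swing_step`, `rollout`, and `ars_train` are illustrative assumptions, and the physics-informed loss term is not included.

```python
import numpy as np

# Hypothetical per-unit parameters (assumptions, not taken from the project).
M = 0.1      # inertia coefficient
D = 0.05     # damping coefficient
P_M = 0.8    # mechanical power input
P_MAX = 1.5  # peak electrical power transfer
DT = 0.01    # integration step in seconds


def swing_step(state, action, dt=DT):
    """Advance rotor angle (rad) and speed deviation (rad/s) by one time step."""
    delta, omega = state
    # Swing equation: M * d(omega)/dt = P_m + u - P_max * sin(delta) - D * omega
    d_omega = (P_M + action - P_MAX * np.sin(delta) - D * omega) / M
    omega_next = omega + dt * d_omega
    delta_next = delta + dt * omega_next  # semi-implicit Euler update
    return np.array([delta_next, omega_next])


def rollout(theta, steps=300):
    """Return the total reward of the linear policy u = theta . (state - equilibrium)."""
    target = np.array([np.arcsin(P_M / P_MAX), 0.0])
    # A perturbed rotor angle stands in for a post-fault condition (assumption).
    state = target + np.array([0.5, 0.0])
    total = 0.0
    for _ in range(steps):
        action = float(np.clip(theta @ (state - target), -1.0, 1.0))
        state = swing_step(state, action)
        error = state - target
        total -= float(error @ error) * DT  # penalize deviation from equilibrium
    return total


def ars_train(iterations=30, n_dirs=8, top_b=4, step_size=0.02, noise=0.05, seed=0):
    """Basic augmented random search over the two policy weights."""
    rng = np.random.default_rng(seed)
    theta = np.zeros(2)
    for _ in range(iterations):
        dirs = rng.standard_normal((n_dirs, 2))
        r_plus = np.array([rollout(theta + noise * d) for d in dirs])
        r_minus = np.array([rollout(theta - noise * d) for d in dirs])
        # Keep the top_b directions ranked by their better-performing perturbation.
        top = np.argsort(-np.maximum(r_plus, r_minus))[:top_b]
        # Scale the step by the reward standard deviation of the kept rollouts.
        sigma_r = np.concatenate([r_plus[top], r_minus[top]]).std() + 1e-8
        grad = ((r_plus[top] - r_minus[top])[:, None] * dirs[top]).sum(axis=0)
        theta = theta + step_size / (top_b * sigma_r) * grad
    return theta
```

Calling `ars_train()` returns the two learned weights, and `rollout(theta)` scores any candidate policy. Because ARS searches policy parameters directly from rollout rewards, this sketch needs no gradient of the simulator; how the physics-based term enters training in the actual project is not shown here.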

Results

A performance comparison of the physics-informed RL agent and a traditional RL agent will be conducted to identify improvements in training speed, reward convergence, sampling efficiency, scalability, and transferability.

Code, Data, and Related Links

In progress.

Publications

In progress.