Using AI to Explore Possibilities in Advanced Manufacturing

A model that helps materials scientists predict not only what is, but what could be

The topological idea of a fiber bundle, illustrated here with a torus, provides the basis for Bundle Networks.

(Image by Mike Perkins | Pacific Northwest National Laboratory)

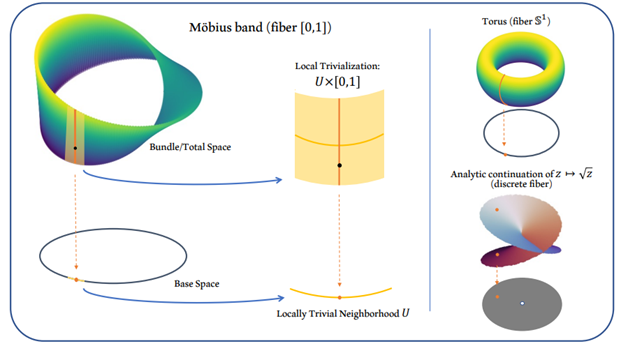

In the most familiar setting, a machine learning model is given an input and makes a single prediction based on that input. For example, a model might be shown many different pictures of animals and asked to predict the species (“cat”, “dog”, etc.) in each one. Because many different pictures receive the same label, this is a many-to-one task. It can be reversed by starting from a specific output label, such as “dog”, and asking for every possible input that would lead to it. In other words, the computer is asked to “show all possible images that would be predicted as containing a dog”. Mathematicians call the set of all such images the fiber of the label “dog”.
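To make the idea of a fiber concrete, here is a minimal sketch in Python. It assumes a hypothetical `toy_classifier` that labels tiny 3-pixel binary "images" rather than a real trained network, and it finds the fiber of a label by brute force: enumerate every possible input and keep the ones that map to that label.

```python
from itertools import product

def toy_classifier(image):
    """Hypothetical stand-in for a trained model: maps a 3-pixel
    binary 'image' to a label. Many images share each label."""
    return "dog" if sum(image) >= 2 else "cat"

def fiber(label, classifier, input_space):
    """Return every input the classifier maps to `label` (its preimage)."""
    return [x for x in input_space if classifier(x) == label]

# Enumerate the whole (tiny) input space: all 2**3 binary images.
all_images = list(product([0, 1], repeat=3))

dog_fiber = fiber("dog", toy_classifier, all_images)
print(dog_fiber)  # [(0, 1, 1), (1, 0, 1), (1, 1, 0), (1, 1, 1)]
```

Brute-force enumeration only works because this input space has eight elements; for real images or continuous processing conditions the input space is far too large to list, which is why a learned model of the fiber is needed.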

Solving a version of this problem could accelerate research in materials manufacturing, where many different processing conditions can yield materials with the same properties. Imagine a few different recipes that all yield a cake with the same delicious consistency. Each recipe may use a different combination of ingredients, baking time, and baking temperature, yet all of them produce the same cake. Together, these recipes are the fiber of the cake.

Similarly, materials scientists want to know all the different recipes they can use to produce their specific material. By exploring the fiber, which contains all of their options, they can choose the recipe that best fits their manufacturing setup, time, and resource constraints. This problem is particularly important for advanced manufacturing processes, including innovative solid phase processing techniques such as the Shear Assisted Processing and Extrusion (ShAPE) method developed at Pacific Northwest National Laboratory, because physics-based models for these cutting-edge approaches are still emerging.
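The workflow described above, finding every recipe that hits a target property and then picking the one that suits the available equipment, can be sketched as follows. Everything here is hypothetical and chosen for illustration: the `Recipe` fields, the grid of candidate settings, and especially `predicted_property`, which stands in for whatever trained surrogate or physics-based model a real lab would use.

```python
from itertools import product
from typing import NamedTuple, Optional

class Recipe(NamedTuple):
    temperature_c: int  # hypothetical processing temperature
    time_min: int       # hypothetical processing time

def predicted_property(r: Recipe) -> int:
    """Hypothetical forward model (recipe -> property). A real one would be
    a trained surrogate or physics-based model, not a simple product."""
    return r.temperature_c * r.time_min

def recipe_fiber(target: int, tol: int, candidates: list) -> list:
    """All candidate recipes whose predicted property is within tol of target."""
    return [r for r in candidates if abs(predicted_property(r) - target) <= tol]

def best_recipe(fiber: list, max_temperature_c: int) -> Optional[Recipe]:
    """Pick the fastest recipe in the fiber that the equipment can run."""
    feasible = [r for r in fiber if r.temperature_c <= max_temperature_c]
    return min(feasible, key=lambda r: r.time_min) if feasible else None

grid = [Recipe(t, m) for t, m in product([150, 175, 200, 225, 250], [20, 24, 30, 40])]
fiber = recipe_fiber(target=6000, tol=0, candidates=grid)
print(fiber)  # three different recipes share the same predicted property
print(best_recipe(fiber, max_temperature_c=225))  # Recipe(temperature_c=200, time_min=30)
```

Separating the two steps is the point of the example: the fiber is computed once for a target property, and different labs can then apply their own constraints (here, a maximum temperature and a preference for short processing times) to choose from it.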

Inspired by this problem, researchers from Pacific Northwest National Laboratory and the University of Washington developed a new deep learning model, Bundle Networks, designed to invert such many-to-one problems. Their research was presented at the Tenth International Conference on Learning Representations (ICLR 2022), a leading venue for machine learning research.

“Our world is full of many-to-one problems where many inputs can yield the same output,” said Henry Kvinge, corresponding author of this study. “While we have good tools in machine learning for predicting output from input, methods for learning all the different input that can yield a specific output are lacking. The aim of Bundle Networks was to fill this gap.”

Machine learning has recently seen dramatic growth in “generative models”, which, rather than simply making predictions, can generate novel content such as images or text. Bundle Networks brings some of the expressive power of these approaches into a framework suited to studying scientific processes.

Drawing on the topological idea of a fiber bundle, Bundle Networks models the fibers of a task by breaking the input space into neighborhoods. Within each neighborhood, it then learns how the data vary along the fiber, that is, how inputs can change while still producing the same output.
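The key topological idea here, which the paper's title calls a "local trivialization", is a reversible change of coordinates that splits each input into two parts: its output, and its position along the fiber. Once you have such a map, generating new inputs with a given output is easy: fix the output and sample the fiber coordinate. The sketch below writes one down by hand for a hypothetical toy map `f(x, y) = x**2 + y**2`, whose fibers are circles. It only illustrates the concept; Bundle Networks learns such maps from data with neural networks, one per neighborhood, and this code is not its architecture. For this toy map, a single trivialization happens to cover every input except the origin.

```python
import math
import random

def f(x, y):
    """Toy many-to-one map: every point on a circle of radius r maps to r**2."""
    return x * x + y * y

def trivialize(x, y):
    """Split an input into (output, position along its fiber).
    Defined for every point except the origin."""
    return f(x, y), math.atan2(y, x)

def untrivialize(s, theta):
    """Inverse of trivialize: rebuild the input from output s and angle theta."""
    r = math.sqrt(s)
    return r * math.cos(theta), r * math.sin(theta)

def sample_fiber(s, n, rng=random):
    """Generate n inputs that all map to output s by sampling the fiber coordinate."""
    return [untrivialize(s, rng.uniform(-math.pi, math.pi)) for _ in range(n)]

samples = sample_fiber(4.0, n=5, rng=random.Random(0))
for x, y in samples:
    # Each sample lies on the circle of radius 2, so f is 4.0 up to rounding.
    print(f"({x:+.3f}, {y:+.3f}) -> f = {f(x, y):.6f}")
```

Note how generation never requires searching the input space: the fiber coordinate is sampled directly and mapped back through the inverse. Learning that inverse for realistic maps, where no closed form exists, is the hard part that a model like Bundle Networks takes on.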

Although Bundle Networks is still at an early stage of development, in the experiments reported by its authors it outperforms general-purpose generative models, including several variants of generative adversarial networks, at learning to invert many-to-one processes. These promising results suggest the approach could help domain scientists better model and understand their data.

This work was supported by the Mathematics for Artificial Reasoning in Science (MARS) initiative, a Laboratory Directed Research and Development program at Pacific Northwest National Laboratory.

Published: June 30, 2022

Courts, N. and H. Kvinge. 2021. "Bundle Networks: Fiber Bundles, Local Trivializations, and a Generative Approach to Exploring Many-to-One Maps (Version 3)." arXiv. https://doi.org/10.48550/ARXIV.2110.06983