Neural Networks, Kernel Machine Models Spotlighted at International Conference

Strengthening how neural networks “think” and make decisions

Composite image by Shannon Colson | Pacific Northwest National Laboratory

Scientists at Pacific Northwest National Laboratory (PNNL) are highlighting their latest artificial intelligence (AI) research on neural networks and modeling accuracy.

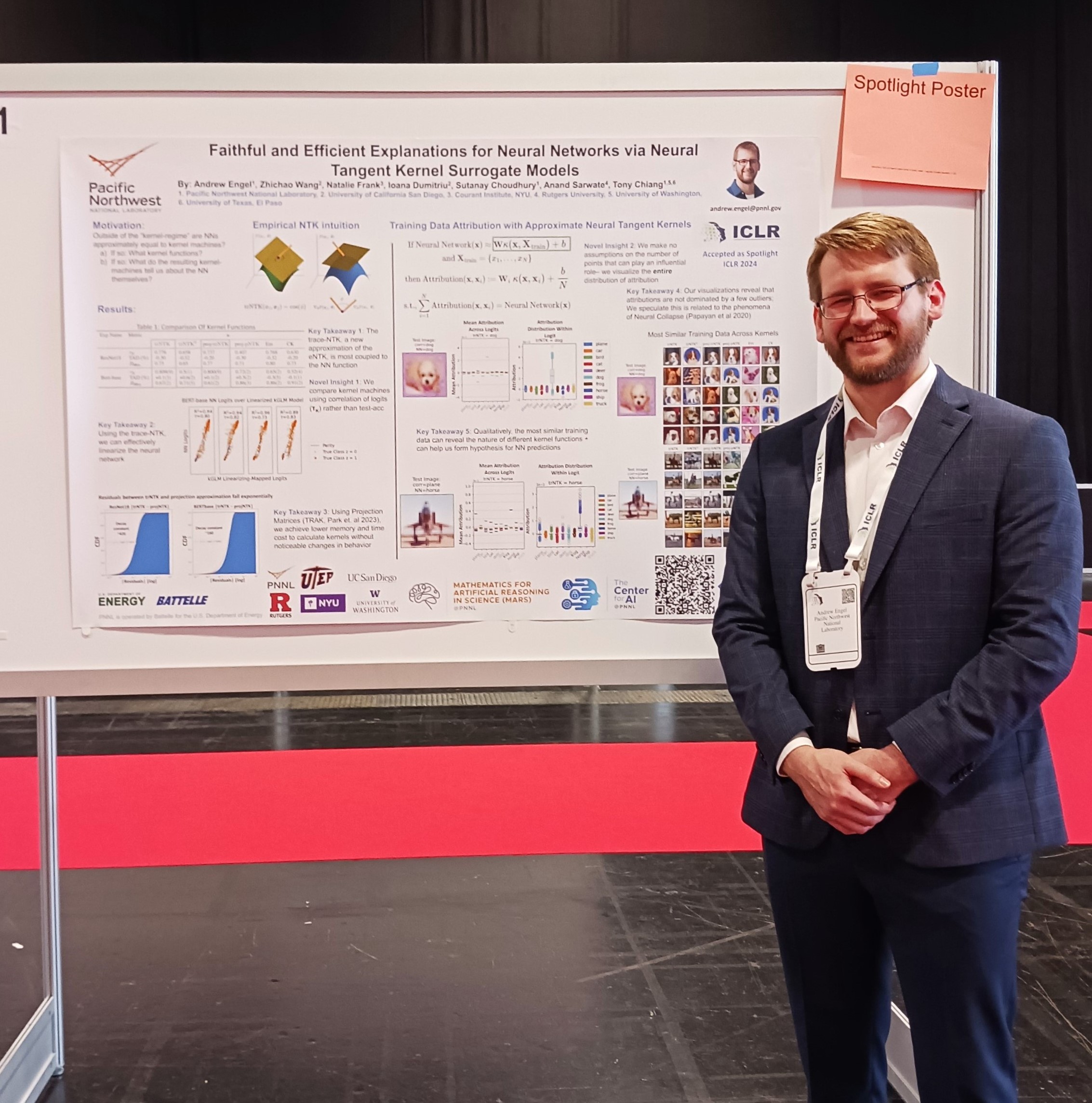

PNNL’s Andrew Engel, Tony Chiang, and Sutanay Choudhury, along with researchers from collaborating universities, presented “Faithful and Efficient Explanations for Neural Networks via Neural Tangent Kernel Surrogate Models” at the 12th International Conference on Learning Representations (ICLR), May 7–11, 2024, in Vienna, Austria.

The co-presenters represent the University of California San Diego, the Courant Institute at New York University, Rutgers University, the University of Washington, and the University of Texas at El Paso.

“Auditing” artificial intelligence

At ICLR, the research was featured as a spotlight poster presentation.

Neural networks are machine learning models, a subset of AI, that make decisions using structures loosely inspired by the human brain. In this research, kernel machines, specifically ones built on neural tangent kernels, serve as surrogates for deep neural networks: simpler models that approximate a network's behavior and are easier to inspect.

“We still do not know how neural networks ‘think’ or explain their decisions,” said Engel. “Our work seeks to find a way to essentially ‘audit’ the outputs of these models. We use kernel machines to probe how the neural network sees the similarity between datapoints, which we can use to infer what the network has learned.”

Popping kernels

Engel emphasizes how important context is to their findings.

“Sometimes people get confused and think that AI is a kind of actual intelligence like you or me, when really it’s just a statistical learning tool,” he said. “For example, the model only has the training dataset as its model of the world. It enjoys none of the context about the world that we know. If there are any biases or spurious correlations in the training dataset, the model will most likely utilize them. Our tool helps visualize what goes wrong as the first step in correcting those issues.”

The research team found a strong correspondence between the neural network and a surrogate built on an approximation of a special kernel called the empirical neural tangent kernel (eNTK); they call this approximation the trace neural tangent kernel. The team then investigated speeding up the computation further using projection matrices.
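The article does not spell out the kernel's formula, so what follows is only a minimal NumPy sketch under stated assumptions. It uses a hypothetical two-layer network `f(x) = W2 @ tanh(W1 @ x)`, takes the eNTK between two inputs to be the matrix of inner products of per-class parameter gradients, and reads the "trace" kernel as the sum of that matrix's diagonal. The published trace kernel may include normalization or other details not described here; every name in the code is illustrative.

```python
import numpy as np

# Hypothetical toy network: f(x) = W2 @ tanh(W1 @ x), with C output classes.
rng = np.random.default_rng(0)
D, H, C = 4, 8, 3  # input dim, hidden width, number of classes (illustrative)
W1 = rng.normal(size=(H, D)) / np.sqrt(D)
W2 = rng.normal(size=(C, H)) / np.sqrt(H)


def jacobian(x):
    """Return the C x P Jacobian of the outputs w.r.t. all parameters.

    Row k is the gradient of output f_k(x) with respect to (W1, W2), flattened.
    """
    a = np.tanh(W1 @ x)  # hidden activations, shape (H,)
    rows = []
    for k in range(C):
        # d f_k / d W1[j, l] = W2[k, j] * (1 - a_j^2) * x_l
        dW1 = np.outer(W2[k] * (1.0 - a**2), x)
        # d f_k / d W2[i, j] = 1{i == k} * a_j
        dW2 = np.zeros_like(W2)
        dW2[k] = a
        rows.append(np.concatenate([dW1.ravel(), dW2.ravel()]))
    return np.stack(rows)  # shape (C, P)


def entk(x1, x2):
    """Empirical NTK: a C x C matrix of gradient inner products."""
    return jacobian(x1) @ jacobian(x2).T


def trace_ntk(x1, x2):
    """Trace of the eNTK: sum over classes of <grad f_k(x1), grad f_k(x2)>."""
    return np.trace(entk(x1, x2))


x_a, x_b = rng.normal(size=D), rng.normal(size=D)
# The trace kernel equals a plain inner product of flattened Jacobians,
# so vec(J(x)) can act as a single feature vector per input.
print(trace_ntk(x_a, x_b), jacobian(x_a).ravel() @ jacobian(x_b).ravel())
```

Because the trace kernel reduces to an inner product of flattened Jacobians, each input can be summarized by one long feature vector, which is why the length of that vector matters for storage.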

As the researchers explain, “We show that an approximation to this approximation, essentially making it even more efficient, considerably speeds up computation and allows efficient storage of the feature vector used to compute kernel functions without a noticeable drop in performance.”
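The article does not say which projection matrices were used. As an assumption, the sketch below uses a Gaussian random projection (Johnson–Lindenstrauss style) to shrink long feature vectors before computing kernel values. The vectors are random placeholders standing in for flattened gradients, and the sizes `P`, `M`, and `N` are illustrative.

```python
import numpy as np

rng = np.random.default_rng(1)
P = 5_000  # length of a full feature vector, e.g. a flattened Jacobian (illustrative)
M = 256    # projected length actually stored (illustrative)
N = 5      # number of inputs

# Placeholder feature vectors; in practice these would come from the network.
phi = rng.normal(size=(N, P))

# Gaussian random projection, scaled so inner products are preserved in expectation.
R = rng.normal(size=(M, P)) / np.sqrt(M)
phi_proj = phi @ R.T  # shape (N, M): store M numbers per input instead of P

K_full = phi @ phi.T              # exact kernel from full feature vectors
K_proj = phi_proj @ phi_proj.T    # approximate kernel from projected vectors

rel_err = np.linalg.norm(K_proj - K_full) / np.linalg.norm(K_full)
print(f"relative Frobenius error: {rel_err:.3f}")
```

Storing `M` numbers per input instead of `P` makes both storage and each kernel evaluation roughly `P / M` times cheaper, while the approximation error shrinks roughly in proportion to `1 / sqrt(M)`, so `M` is the knob that trades accuracy for efficiency.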

The team's visualizations indicated that the models were drawing on all the training data in the predicted class to make decisions.

“Essentially, prior works had assumed explanations-by-example of neural network behavior were sparse: that only a few nearby examples were necessary to explain the network behavior,” said Engel. “Our work provides evidence that this assumption needs to be reconsidered. It would appear that every single point in the training dataset generally contributes to the behavior of the model on new data.”
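To make the "sparse versus dense" question concrete: in a kernel machine, each prediction is a weighted sum over training points, so one can measure how much of it the few largest terms carry. This is a minimal sketch only. The data are random placeholders, and fitting the weights by kernel ridge regression (with a hypothetical ridge term `lam`) is our choice, not necessarily how the researchers fit their surrogates; the helper names are illustrative.

```python
import numpy as np


def contributions(K_test_train, alpha):
    """Per-training-point terms of a kernel-machine prediction.

    The prediction for test point t is sum_i alpha[i] * K[t, i]; the i-th
    term is training point i's contribution to that prediction.
    """
    return K_test_train * alpha[np.newaxis, :]


def top_k_share(contrib_row, k):
    """Fraction of total absolute contribution carried by the k largest terms."""
    mags = np.sort(np.abs(contrib_row))[::-1]
    return mags[:k].sum() / mags.sum()


# Illustrative data only: random features stand in for kernel feature vectors.
rng = np.random.default_rng(2)
n_train, n_test, dim = 200, 3, 50
F_train = rng.normal(size=(n_train, dim))
F_test = rng.normal(size=(n_test, dim))
y_train = rng.normal(size=n_train)

K_train = F_train @ F_train.T
K_test_train = F_test @ F_train.T

# Fit dual weights by kernel ridge regression (lam is an assumed value).
lam = 1e-1
alpha = np.linalg.solve(K_train + lam * np.eye(n_train), y_train)

C = contributions(K_test_train, alpha)
preds = C.sum(axis=1)  # same as K_test_train @ alpha
for t in range(n_test):
    print(f"test {t}: top-10 share = {top_k_share(C[t], 10):.2f}")
```

If a handful of nearby examples explained a prediction, `top_k_share` would sit near 1 for small `k`; values well below 1 mean the contributions are spread across many training points, which is the dense picture the quote describes.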

The research is part of PNNL’s Mathematics for Artificial Reasoning in Science (MARS) initiative and the Center for AI.

PNNL at ICLR

ICLR brings together researchers dedicated to advancing representation learning, a branch of AI. Other PNNL presentations were also selected for the conference.

Published: May 13, 2024