Black Lightning

PI: Jessica Baweja

Objective

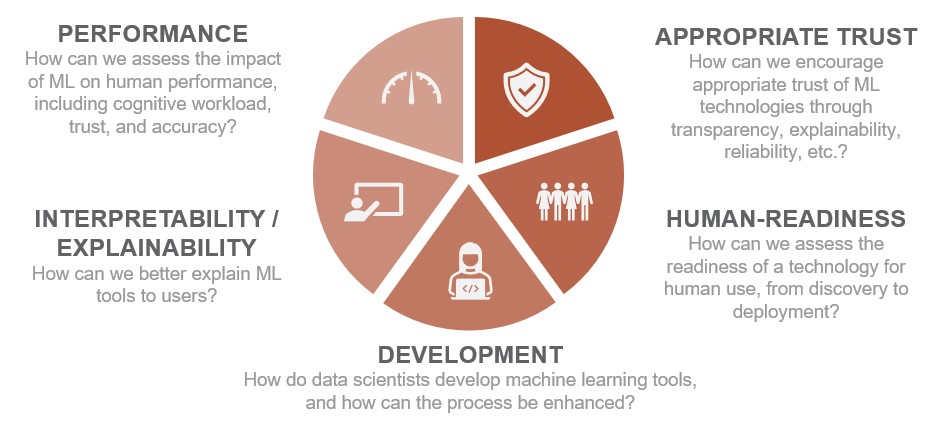

- Identify ways to better integrate artificial intelligence (AI)/machine learning (ML) into human work using human factors methods.

- Conduct applied research to understand the factors that influence trust, reliability, and use of AI/ML:

  - measure power systems operators' trust in AI/ML compared to traditional tools

  - assess whether feature visualizations are interpretable by human users

  - explore different methods for mitigating the impact of errors on trust in AI/ML

  - examine ways to evaluate the human-readiness of AI/ML

Overview

Black Lightning is an effort to understand the workflow of power systems operators and how artificial intelligence and machine learning (AI/ML) can be better integrated into that domain. The power systems domain has historically faced a variety of challenges when integrating AI/ML tools. Power systems operators are generally skeptical of new tools, and tool development is often siloed from operators and therefore uninformed by their experiences. Overall, little attention has been paid to understanding and measuring how AI/ML tools affect existing workflows.

The Black Lightning team developed a methodology to measure operator use of, trust in, and reliance on an AI-based contingency analysis tool that provides recommendations to operators performing contingency analyses. We also explored human factors related to the integration of AI/ML into work applications. This project deepens understanding of how humans develop and work with AI/ML, particularly how human factors methods can ground AI/ML development in end users' needs, preferences, and workflows, producing tools that are more likely to be used and trusted.

Impact

- Evaluating trust in AI/ML will inform the design of training for users and stakeholders that supports appropriate use.

- Frameworks for the ethical, safe, and responsible use of AI/ML, especially generative AI, will help ensure that AI/ML is used appropriately with human considerations in mind.

- Human factors research, applied from early data science research through deployment, will produce more human-ready AI.

- Human subjects studies will inform the design of better interpretability and explainability tools for users.

Publications and Presentations

- Baweja, J. A., Fallon, C. K., & Jefferson, B. A. (2023). "Opportunities for human factors in machine learning." Frontiers in Artificial Intelligence.

- Anderson, A. A., et al. (2023). "Human-Centric Contingency Analysis Metrics for Evaluating Operator Performance and Trust." IEEE Access, vol. 11, pp. 109689-109707.

- Baweja, J. A., & Jefferson, B. A. (2023, May 10). "Human Factors in Technology Discovery." Presented by J. A. Baweja at the Human Factors Symposium, Richland, Washington.

- Jefferson, B. A., Fallon, C. K., & Baweja, J. A. (2023, July 24). "Developing Confidence in Machine Learning Results." Presented by J. A. Baweja at the Applied Human Factors and Ergonomics Conference, San Francisco, California.

- Jefferson, B. A., & Baweja, J. A. (2023, July 22). "Human Factors in Discovery Phase of TRLs and HRLs." Presented by B. A. Jefferson at the Applied Human Factors and Ergonomics Conference, San Francisco, California.

- Wenskovitch, J. E., Jefferson, B. A., Baweja, J. A., Fallon, C., Ciesielski, D. K., Anderson, A. A., Kincic, S., et al. (2022, July 28). "Operator Insights and Usability Evaluation of Machine Learning Assistance for Power Grid Contingency Analysis." Presented by B. A. Jefferson at the 13th International Conference on Applied Human Factors and Ergonomics, New York, New York.