Technology Overview

Our technology makes machine learning models more transparent through a novel example-based explanation technique. The approach makes predictions more interpretable while remaining scalable to high-dimensional data, which helps users place appropriate trust in automated systems. It is immediately relevant to potential licensees interested in deploying reliable and explainable artificial intelligence (AI) systems.

The increasing complexity and deployment of machine learning models across various industries pose significant trust and reliability challenges. Users often struggle to understand the classification decisions made by these models, which can lead to misuse or underutilization. The novel solution developed at Pacific Northwest National Laboratory overcomes these barriers.

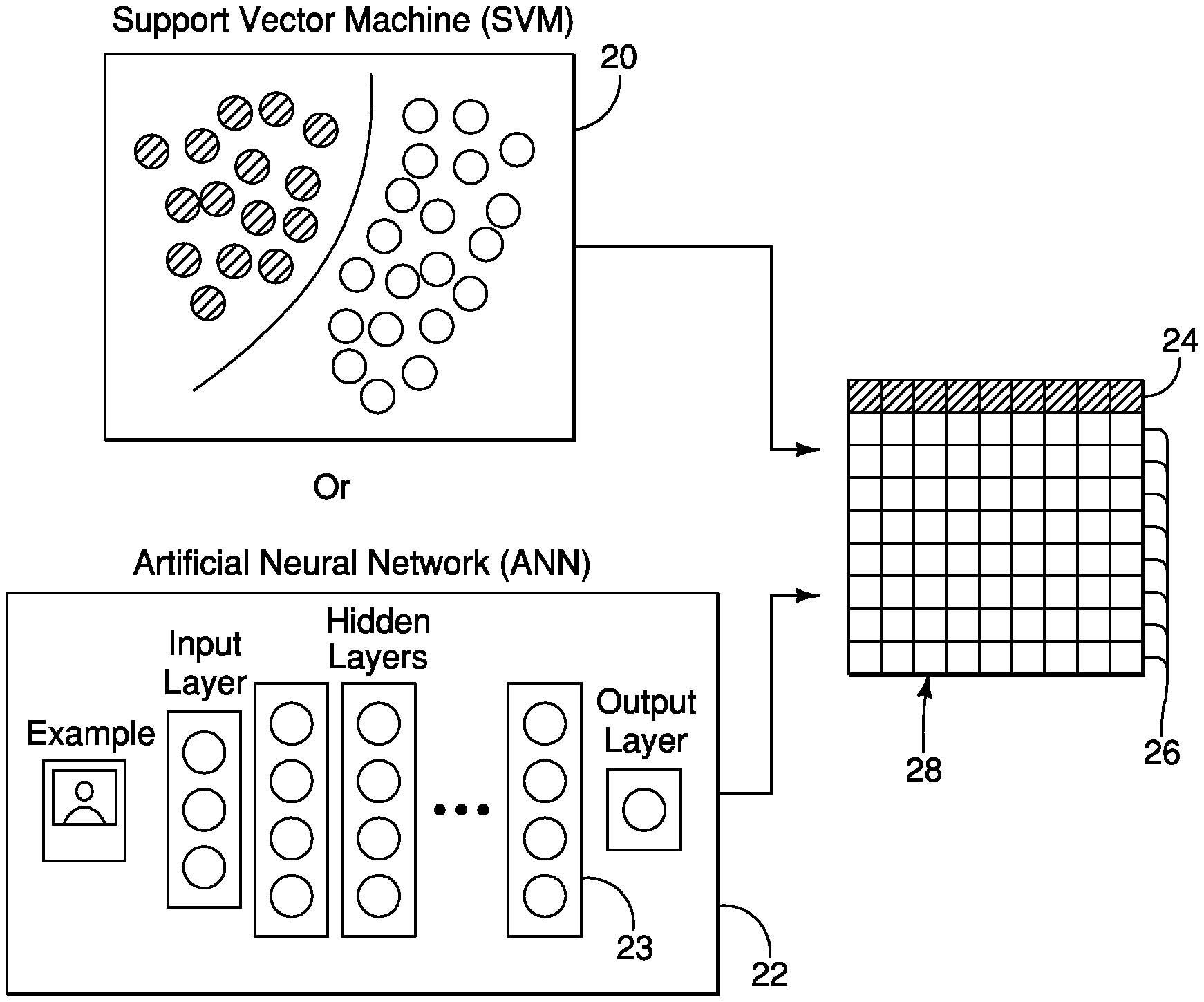

Our technology uses a graph algorithm to identify training instances that can explain a particular decision made by a machine learning classifier. The approach recommends examples from the training data that are similar to an input instance provided by the user. These examples are either normative (of the same class as the input) or comparative (of a different class). Our algorithm relates the recommended instances in a graph, which can be presented to the user in different ways to support better explanation. Paths within the graph of recommended examples show the user how realistic modifications to the input instance affect the classifier's predictions.
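The idea can be illustrated with a minimal sketch. This is not the laboratory's implementation: the overview does not specify the distance measure, how the graph is built, or how paths are chosen, so the sketch assumes Euclidean distance, a nearest-neighbor graph, and Dijkstra shortest paths. All function names and the toy data are hypothetical.

```python
import heapq
import math

def recommend_examples(x, predicted_label, train_X, train_y, k=2):
    """Indices of the k nearest same-class (normative) and
    k nearest different-class (comparative) training instances."""
    ranked = sorted(range(len(train_X)), key=lambda i: math.dist(x, train_X[i]))
    normative = [i for i in ranked if train_y[i] == predicted_label][:k]
    comparative = [i for i in ranked if train_y[i] != predicted_label][:k]
    return normative, comparative

def build_graph(points, n_neighbors=2):
    """Assumed construction: link each point to its nearest neighbors,
    weighting each undirected edge by Euclidean distance."""
    graph = {i: {} for i in range(len(points))}
    for i, p in enumerate(points):
        others = sorted((j for j in range(len(points)) if j != i),
                        key=lambda j: math.dist(p, points[j]))
        for j in others[:n_neighbors]:
            w = math.dist(p, points[j])
            graph[i][j] = w
            graph[j][i] = w
    return graph

def shortest_path(graph, source, target):
    """Dijkstra's algorithm; returns the node list, or [] if unreachable."""
    best, prev, heap = {source: 0.0}, {}, [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            break
        if d > best.get(u, math.inf):
            continue  # stale heap entry
        for v, w in graph[u].items():
            if d + w < best.get(v, math.inf):
                best[v] = d + w
                prev[v] = u
                heapq.heappush(heap, (d + w, v))
    if target not in best:
        return []
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1]

def explain(x, predicted_label, train_X, train_y, k=2):
    """Path of (instance, label) pairs from the input to its nearest
    comparative example, passing through other recommended examples."""
    normative, comparative = recommend_examples(x, predicted_label, train_X, train_y, k)
    chosen = normative + comparative
    points = [x] + [train_X[i] for i in chosen]          # node 0 is the input
    labels = [predicted_label] + [train_y[i] for i in chosen]
    graph = build_graph(points)
    target = 1 + len(normative)                          # nearest comparative example
    return [(points[n], labels[n]) for n in shortest_path(graph, 0, target)]

# Toy data: six instances on a line, class "A" on the left and "B" on the right.
train_X = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0), (5.0, 0.0)]
train_y = ["A", "A", "A", "B", "B", "B"]

for point, label in explain((0.4, 0.0), "A", train_X, train_y):
    print(point, label)
```

Each step along the printed path is a real training instance, so the sequence reads as a series of plausible changes to the input that ends at an instance of a different class. The predicted label is passed in as an argument here; in practice it would come from the classifier being explained.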

We conducted a rigorous human subjects study, which showed that when people used escape routes, the accuracy of the “human + model system” exceeded the accuracy of either the human or the model alone. Our technology provides a transparent and comprehensible visualization of model decisions. Potential economic impacts include improved decision-making reliability and enhanced user trust, leading to broader adoption of machine learning technologies.

Applicability

This technology can be utilized across various industries where machine learning models are employed, including healthcare, finance, autonomous systems, and cybersecurity. Potential users include data scientists, industry professionals, and manufacturers looking to integrate explainable AI into their workflow.

Advantages

- Scalability: Effectively scales to high-dimensional data

- Transparency: Provides clear and interpretable explanations of machine learning model decisions

- Versatility: Applicable to a wide range of machine learning models and industries

- User-friendly: Designed to be intuitive for non-experts, facilitating broader adoption and trust calibration

For more information, please contact: commercialization@pnnl.gov