Advanced Computing, Mathematics and Data

Research Highlights

February 2016

Separate Paths to ‘Parallel’ Solutions

Three IPDPS papers to showcase novel solutions for improving HPC processing

Often, the best approach to solving a big problem is to break it into smaller, more manageable pieces. This is the general premise of parallel computing, where a large calculation is divided into parts that are carried out simultaneously, and it has been especially beneficial to high-performance computing, including the massively parallel leadership computing systems used within the U.S. Department of Energy complex. PNNL computer scientists continue to advance parallel computing research and will again share their work at the upcoming International Parallel & Distributed Processing Symposium, known as IPDPS, the distinguished international forum for engineers and scientists to present their research on parallel computing.

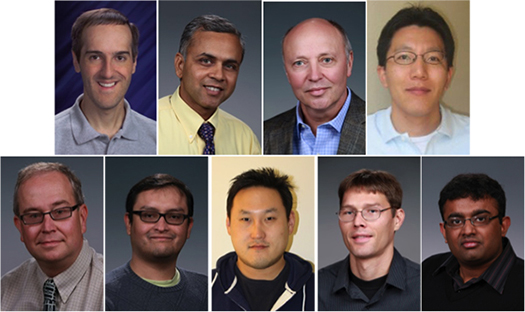

(Clockwise from top left): Daniel Chavarría-Miranda, Nitin Gawande, Adolfy Hoisie, Seung-Hwa Kang, Abhinav Vishnu, Nathan Tallent, Shuaiwen Leon Song, Joseph Manzano and Darren Kerbyson will all have papers featured at IPDPS 2016.


Among the 114 papers accepted for presentation at IPDPS 2016 (out of 496 submitted), three were authored by members of PNNL’s Advanced Computing, Mathematics, and Data Division High Performance Computing group. The papers address fault modeling using machine learning, an analytic model for parallel machines, and a benchmark suite that can help exploit power-efficient parallelism.

In “Fault Modeling of Extreme-scale Applications using Machine Learning,” a team led by primary author Abhinav Vishnu, with Nathan Tallent, Darren Kerbyson, Adolfy Hoisie (all PNNL), and Hubertus van Dam (Brookhaven National Laboratory), takes on faults, which are common in large-scale systems and can ultimately compromise their operation. In their work, the authors introduce a fault modeling method that uses machine-learning algorithms to classify a system or application fault as “innocuous” or “error.” Their specific goal was to answer the question: given a multi-bit fault in main memory, will it result in an error or can it be safely ignored? Their method correctly classified 99 percent of the faults that resulted in errors and more than 60 percent of the faults that did not.

“Being able to create fault models of applications will allow multi-bit memory faults to be classified as either error or innocuous at runtime, potentially preventing unnecessary execution of recovery algorithms,” Vishnu, a senior scientist with ACMD Division’s HPC group, said. “Our method showed that when a double-bit fault occurs, the application only needs to execute a recovery algorithm roughly 40 percent of the time.”
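The arithmetic behind these figures can be sketched in a few lines of Python. This is an illustration only, not the authors' method or code: the function names and the `error_fraction` values (the share of faults that truly cause errors) are hypothetical, and the 60 percent figure is used as a lower bound because the article reports "more than 60 percent." Under those assumptions, recovery runs whenever a fault is labeled "error," which covers both correctly caught errors and innocuous faults that are mislabeled.

```python
def recovery_rate(error_fraction, error_recall, innocuous_recall):
    """Fraction of faults for which a recovery algorithm would run.

    Recovery runs whenever the classifier labels a fault "error":
    true errors it catches, plus innocuous faults it mislabels.
    """
    caught_errors = error_fraction * error_recall
    false_alarms = (1.0 - error_fraction) * (1.0 - innocuous_recall)
    return caught_errors + false_alarms


def missed_error_rate(error_fraction, error_recall):
    """Fraction of all faults that are real errors labeled "innocuous"."""
    return error_fraction * (1.0 - error_recall)


# Classification rates reported in the article (0.60 is a lower bound).
ERROR_RECALL = 0.99
INNOCUOUS_RECALL = 0.60

# Hypothetical shares of faults that truly lead to errors.
for error_fraction in (0.0, 0.05, 0.10):
    rate = recovery_rate(error_fraction, ERROR_RECALL, INNOCUOUS_RECALL)
    missed = missed_error_rate(error_fraction, ERROR_RECALL)
    print(f"errors={error_fraction:.2f} recovery={rate:.3f} missed={missed:.4f}")
```

When the assumed share of true errors is small, the recovery rate stays close to 40 percent, in line with the figure Vishnu cites, while the share of errors that slip through unrecovered remains small.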

As co-authors, HPC scientists Shuaiwen Leon Song and Daniel Chavarría-Miranda partnered with researchers from Eindhoven University of Technology (The Netherlands) and Technische Universität Dresden (Germany) to address potential bottlenecks, discern potential optimizations, and derive novel intuitions using “X: A Comprehensive Analytic Model for Parallel Machines.” The X-model can comprehensively investigate the combined effects of various types of parallelism based on a machine’s spatial state. It then draws an intuitive figure—an “X-graph”—to identify performance bottlenecks and find optimizations.

“The X-graph allows researchers to ‘see’ if an optimization scenario actually is effective—and identify why,” Song explained. “The X-model can help researchers determine limiting factors and show a technique’s effectiveness, as well as provide a viable way to explore further optimizations. Compared to other analytical models, the X-model is more dynamic and flexible, making it better suited for analyzing complex computing architectures.”

Tallent also serves as the primary author of “Algorithm and Architecture Independent Benchmarking with SEAK,” co-authored with Joseph Manzano, Nitin Gawande, Seung-Hwa Kang, Kerbyson, and Hoisie (all from PNNL) and Joseph Cross of the Defense Advanced Research Projects Agency (DARPA). SEAK, the Suite for Embedded Applications & Kernels, is a new benchmark suite that captures key constraining problems—application bottlenecks due to performance or power constraints—within several high-performance embedded applications.

“SEAK differs from other benchmark suites in that constraining problems should be specified so that a potential solution can be any combination of software and hardware that meets the problem’s inputs and outputs. That means both algorithms and architectures, the typical focus of benchmark suites, are variables that must be unspecified,” Tallent explained. “The goal is to encourage creative solutions at different parts of the performance-power-accuracy trade-off curve. These solutions will enable flexible and efficient design of new embedded platforms for Department of Defense missions.”

In an initial effort to characterize the high-performance embedded space using a goal-oriented specification, 12 SEAK constraining problems have already been developed.

“IPDPS provides a high-level forum to showcase the leading edge in parallel computing, making it especially notable that PNNL’s contribution is almost three percent at this top conference in the HPC arena,” said Kerbyson, the Associate Division Director of PNNL’s HPC group. “I am sincerely proud of the HPC group members who continue to be recognized for their excellent research.

“I also am pleased that scientists from our overall team, including Daniel, Leon, Nathan, and Abhinav along with Mahantesh Halappanavar, Sriram Krishnamoorthy, Ken Roche, and Antonino Tumeo, as well as Edoardo Apra from EMSL and Ryan LaMothe from PNNL’s National Security Directorate, are further representing PNNL’s computing leadership by serving on IPDPS’ Technical Program Committee,” he concluded.

IPDPS is sponsored by the Institute of Electrical and Electronics Engineers (IEEE) Computer Society with support from IEEE Computer Society’s Computer Architecture and Distributed Processing technical committees. IPDPS 2016, to be held in Chicago on May 23-27, marks the 30th year of the symposium.

References

- Li A, SL Song, A Kumar, D Chavarría-Miranda, and H Corporaal. 2016. “X: A Comprehensive Analytic Model for Parallel Machines.”

- Tallent NR, JB Manzano, NA Gawande, S-H Kang, DJ Kerbyson, A Hoisie, and JK Cross. 2016. “Algorithm and Architecture Independent Benchmarking with SEAK.”

- Vishnu A, H van Dam, N Tallent, D Kerbyson, and A Hoisie. 2016. “Fault Modeling of Extreme-scale Applications using Machine Learning.”